Key figures in artificial intelligence and digital technology have published an open letter calling for a six-month pause in developing AI systems more powerful than OpenAI's GPT-4.

According to a World Economic Forum article, the signatories to the letter, published by the Future of Life Institute, warned that "advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources".

The letter has been signed by more than 1,400 people, including Apple co-founder Steve Wozniak, Turing Award winner Professor Yoshua Bengio and Stuart Russell, Director of the Center for Intelligent Systems at the University of California, Berkeley.

The letter was also signed by Elon Musk, a co-founder of OpenAI, the developer of ChatGPT. Musk's foundation also provides funding to the Future of Life Institute, the organisation that published the letter. A number of researchers at Alphabet's DeepMind added their names to the list of signatories.

The letter accused AI labs of rushing into the development of systems with greater intelligence than humans without properly weighing up the potential risks and consequences for humanity.

"Recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control," the letter stated.

The signatories to the letter called for AI developers to work alongside governments and policy-makers to create robust regulatory authorities and governance systems for AI.

In an apparent response to the open letter, Sam Altman, CEO of OpenAI, whose GPT-4 has led the development of AI in recent months, posted a tweet saying that a good future with artificial general intelligence (AGI) requires "the technical ability to align a superintelligence", "sufficient coordination among most of the leading AGI efforts", and "an effective global regulatory framework including democratic governance".

The tweet essentially summarises a blog post by Altman dated 24th February 2023. In the post, Altman says his company's mission is "to ensure that artificial general intelligence – AI systems that are generally smarter than humans – benefits all of humanity".

Altman also acknowledged the potential risks of hyper-intelligent AI systems, citing "misuse, drastic accidents and societal disruption". The OpenAI CEO went on to detail his company's approach to mitigating those risks.

"As our systems get closer to AGI, we are becoming increasingly cautious with the creation and deployment of our models. Our decisions will require much more caution than society usually applies to new technologies and more caution than many users would like. Some people in the AI field think the risks of AGI (and successor systems) are fictitious; we would be delighted if they turn out to be right, but we are going to operate as if these risks are existential."

Dubai to host inaugural Global Government Cloud Forum

Dubai to host inaugural Global Government Cloud Forum

Honda, Nissan aim to merge by 2026 in historic pivot

Honda, Nissan aim to merge by 2026 in historic pivot

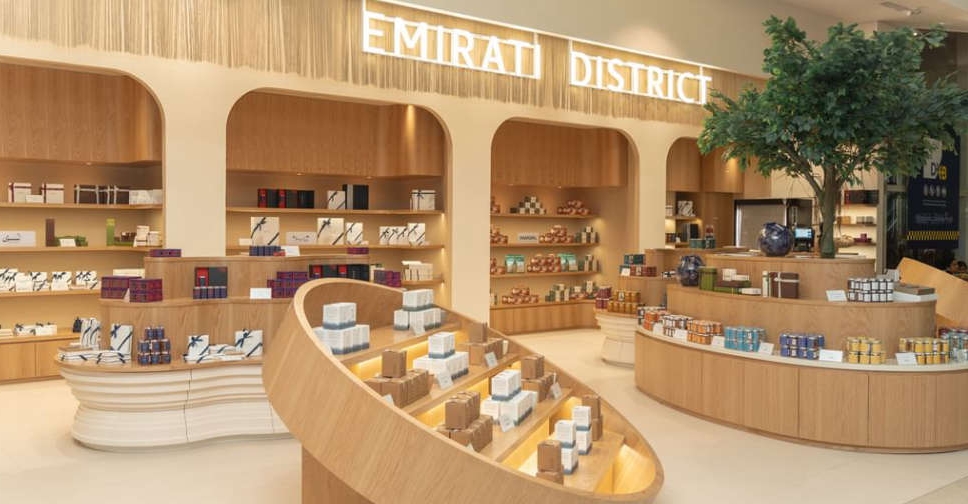

New hub for Emirati businesses launches at DXB

New hub for Emirati businesses launches at DXB

ADNOC drilling expands fleet with new jack-up rigs

ADNOC drilling expands fleet with new jack-up rigs